A strategy to dedupe records in Salesforce (or in any other system)

Too many of the same record is a problem! Here are some steps to find and remove duplicate items. Read: "Everything Starts Out Looking Like a Toy" #136

Hi, I’m Greg 👋! I write essays on product development. Some key topics for me are system “handshakes”, the expectations for workflow, and the jobs we expect data to do. This all started when I tried to define What is Data Operations?

This week’s toy: a project to create a battery-free Game Boy emulator. You read that correctly. It’s a device that uses a combination of solar cells and capacitive button-pushing energy capture to power a device that runs Game Boy games. This is the first of (hopefully) many devices that are battery-free versions of other devices we use. Edition 136 of this newsletter is here - it’s March 13, 2023.

The Big Idea

A short long-form essay about data things

⚙️ A strategy to dedupe records in Salesforce (or in any other system)

It probably happens several times a day for many Sales ops or Marketing ops pros. A Slack message arrives, stating “I found a duplicate account in the CRM.” If you’ve got a solid team and a process for resolving these accounts, there may even be a ticketing workflow set up when these things happen to make it easy to resolve.

Finding duplicate accounts (or any other object) is pretty easy on a one-off basis. Most people will be able to help you find an errant account that got created from some weird process and doesn’t match the ownership of their current account. So you use a native process (if you’re using Salesforce) or perhaps a tool to resolve the issue, and you’ve solved that issue.

But what about doing this at scale? It’s easy for this process to get out of control if you don’t put some logic in place. If your duplicate process takes a few minutes to be reviewed by a person and then that person needs to decide how to combine these records, merging records becomes a significant bottleneck.

Duplicate Records need to be solved by automation

So you’ve decided that you want to automate your dedupe process and combine records automatically. How does this work?

If you think of this process in pseudocode, you’ll want to:

Define a set of rules that would make an entity unique. For example, if you were looking at Accounts, you might decide the account record is defined by a unique combination of Name, Billing Country, and Website address.

Whenever an Account record is saved, if this duplication standard is encountered, you have a few choices. You can raise an error and either refuse to complete the save action, offer to combine matching records or place this record into a queue where it can be combined.

When you combine records, determine tie-breaking conditions that identify the “winning” record among a series of matching records and then decide how to combine fields from losers into winners (or to take only winning values).

This is going to look slightly different for each entity that you manage

When you do this process manually it’s hard to do anything but fill in missing data or perhaps prioritize values from one record or the other based on more original or more recent data. That’s not going to work when you try to keep up with rapidly changing data.

An example: managing duplicate records in Salesforce

If you’re using Salesforce, there are some built-in methods to solve this problem.

Matching rules help you to understand when you have a duplicate object (account entity or otherwise. This means that you can define a field or combination of fields (e.g. the first 8 characters of the account name, combined with the country and website) to identify when you have a duplicate.

These matching rules, when enabled, let you do two things:

Alert users when they are using the app and if they have sufficient permissions, commit a merge when there are duplicate rules that are triggered.

Run a series of duplicate jobs that automatically merge most records when there is no conflict.

There are a few challenges with this approach. When you’re dealing with certain entities like accounts, merging accounts can change ownership. This means that the change might need to be confirmed by a sales operations team or other admin.

Another issue is that you can’t auto-trigger a flow based on the results of a merge using duplicate jobs. When a conflict happens because it’s a failed merge, you won’t be able to create an easily automated sequence to deal with the issue.

While you are thinking about how best to deal with these duplicates, there is another series of questions that need to be resolved:

What happens when the duplicates correspond to records in a different system than Salesforce that doesn’t have native duplicate management?

How do you protect certain records from entering “duplicate management” e.g. customers?

How do you combine records by field (or by groups of fields) once you identify duplicates?

One way to handle these conflicts in records is to identify a way to uniquely identify records using data from other systems.

How to create an entity that spans systems

If each record has a unique key, you can do two things:

use the information held in other systems to validate which field information is reliable and should be kept.

create an entity where part of the information is held in Salesforce and part of it is held elsewhere.

The simple way to think of this is as a series of connected keys. The Salesforce record that you want to keep has a relationship with other systems.

To do this by hand if you don’t already have information distributed across systems:

Create a report listing all of your accounts, including the Salesforce ID, name, country, domain, and any other fields that are potentially unique, like customer status.

Add a single field to your Salesforce object indicating whether the record is canonical (the record that should survive).

Create a composite key concatenating the account’s country with the first several characters of the account name with the website domain name.

Export the report and pivot it on the composite key - if you get more than one match you know you have a duplicate.

For each pair that you have, you’ll need to decide which is the winning record and which records should be losers. Remember that this is recursive - you keep finding duplicates until they don't meet your criteria, so you'll have to do this either whenever you're asked or on a regular basis.

Putting the pieces back together

When you merge two records by deciding the winner, you need to copy the right field values into the right place.

How do you decide? Some ways you might consider differentiating between field values include:

Selecting the oldest value

Selecting the newest value

Selecting a record having a non-empty value

Picking the most complete set of values across the record

Conducting a test based on the combination of values to decide which fields to copy from a loser to a winner

You need automation to do this well.

Is there a better way?

If this sounds like a lot of work, it is! To make it easier, consider a dedicated system that solves the problem of duplicate records like Validity, Cloudingo, or Syncari (full disclosure: as a Product Manager at Syncari, I’m going to suggest that option).

Stepping back from tool selection, there is a bigger issue here. Designing a data model that facilitates deduping and merging is a prerequisite for using any system that helps you with a process to remove duplicates.

Understanding how you manually identify, duplicate, and combine fields from winning and losing records is the first step to automating a solution so that you don’t spend all of your operations time manually resolving duplicates.

What’s the takeaway? Duplicates are something that we need to deal with in all go-to-market systems. To handle this, build a rubric for comparing a set of fields to determine when you find duplicate records. You will also need a strategy to combine fields from duplicate record sets and exception conditions to prevent certain records from entering the merge process.

Links for Reading and Sharing

These are links that caught my 👀

1/ Information Snacks - A picture is worth a thousand words (or something like that). When you are sharing user stories internally or externally, it helps to have a visual template to organize the relevant information and make it consistent. The team at Posthog created this great example.

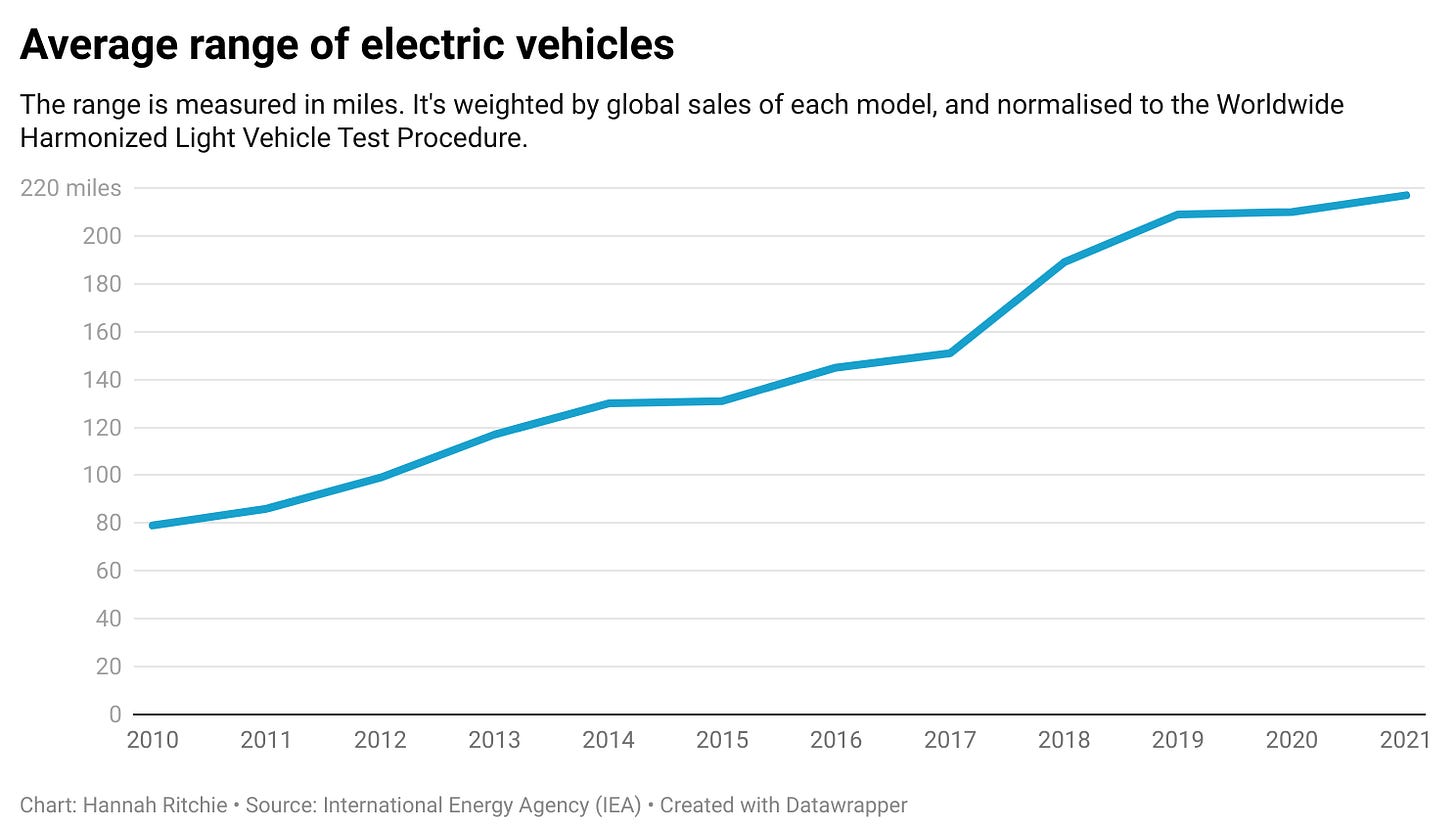

2/ EV Range Anxiety is getting … less anxious - The range of electric cars is getting steadily bigger. Even accounting for the miles you lose in cold weather, EVs are becoming a better alternative to city cars. Now, solving the charging network problem is the biggest barrier (along with affordable pricing) to mass adoption.

3/ Be cautious about using GPT for search - Janelle Shane tested out the new Bing search and found that it consistently makes things up. I think this points to a larger challenge with large language models. We need to train them to answer questions and also how to vet their own answers according to known rules or microservices that will help them to fact-check information. A machine learning model that suggests the best next alternative does not have a built-in way to validate itself. Perhaps a “mechanical turk”-like method using people (or machine models) will help here.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

Want more essays? Read on Data Operations or other writings at gregmeyer.com.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon