Three prompt templates that make your AI actually useful

Is your chatbot helping you to level up? Use structured prompts in your workflow to uncover what's holding you back. Read: "Everything Starts Out Looking Like a Toy" #274

Hi, I’m Greg 👋! I write weekly product essays, including system “handshakes”, the expectations for workflow, and the jobs to be done for data. What is Data Operations? was the first post in the series.

This week’s toy: Deadstack is a fun take on a news aggregator that shows you recent stories and groups articles that are similar (report on the same story). It makes it easy to see the coverage of a tech issue as it’s happening.

Edition 274 of this newsletter is here - it’s October 27, 2025.

Thanks for reading! Let me know if there’s a topic you’d like me to cover.

The Big Idea

A short long-form essay about data things

⚙️ Build a chat assistant that learns your flow

You’ve seen this movie before: you land on your AI assistant’s home page and find a pile of half-finished chats that never turned into anything useful (or reusable). Most people still use AI assistants like a search bar with better grammar.

They throw in a question, get a decent answer, and move on. But that’s not collaboration. It’s copy-paste cognition.

If you want AI to think with you, not just for you, you need to start treating your prompts as functions. That means creating reusable building blocks that give your assistant memory, structure, and continuity.

I recently participated in a 90-minute workshop with Dr. Jason Womack, where a group of us ran through two rounds of prompting to understand more about our metacognition. We wanted to know more about how we interact with chat assistants and how to use that feedback to inspect our own thinking process.

The setup:

15 minutes to orient us to a challenge to design an experiment to learn more about how we prompt

30 minutes for session 1, where we tried a prompt and then reported out on the result

30 minutes on a repeat with a different prompt and a readout

Here’s how I responded to that prompt.

Moving from one-off prompts to a thinking system

In 2023, we thought about Assistants as “one-shot” responses, similar to the way that you would use a search engine. But with the advent of memory across chats, you can use this persistent layer to both learn from existing chats and to create reusable responses.

Before memory (and your reusable prompts), you can think of your AI assistant as a junior teammate. Every time you start a new chat, you’re either training that teammate or starting over from scratch.

Structured prompts make that process easier. They turn conversations into a repeatable process so your assistant gradually understands your voice, your goals, and your decision patterns. Over time, you build a library of cognitive shortcuts. These are reliable ways to explore ideas, refine thinking, and close each loop with action.

When you don’t follow a repeatable process

You don’t have to be a systems thinker to build a system for getting better. However, you need to start by not settling for a one-shot answer from your assistant.

Most people prompt once, get an answer, and move on. They treat each conversation as a fresh start instead of part of an evolving workflow. Without a repeatable process, there’s no way to improve the quality of thinking over time. You can’t see what worked, what didn’t, or how your assistant could learn from the last round.

AI can often feel inconsistent because it mirrors the input it receives, and that input is often inconsistent.

The problem isn’t the use of an AI model as an assistant. It’s that we didn’t have a method in the first place.

Prompts as reusable components

In our workshop, we built a new workflow to treat prompts like reusable components instead of disposable questions.

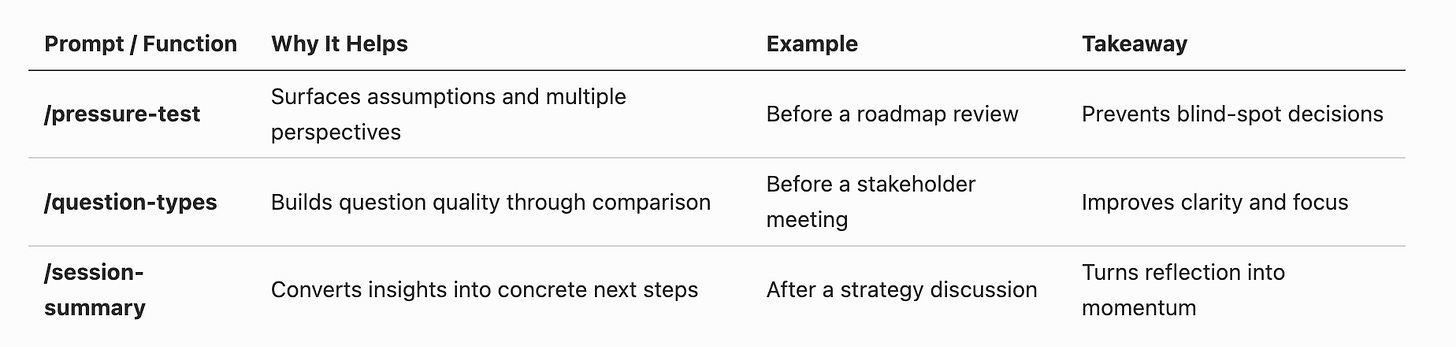

Here are three “prompt functions” that turn your assistant from a clever tool into a reliable collaborator.

Note: we are prefacing these commands with a “/”, like the “slash” commands you would see in Slack or on the command line, because you can use this method to teach your assistant to follow these commands.

If your command was “/pressure-test” you could say:

/pressure-test my idea to start the new project next week instead of two weeks from now
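One way to picture what the slash command does is a lookup table that expands a short command into the full instruction. The Python sketch below is illustrative only: `PROMPTS` and `expand` are hypothetical names, and in a chat assistant you would teach the command in conversation rather than run code. The template text is adapted from the pressure-test prompt in this essay.

```python
# Hypothetical sketch: map slash commands to reusable prompt templates.
PROMPTS = {
    "/pressure-test": (
        "Restate my idea in your own words and list 3 assumptions you think "
        "I'm making. Then analyze it from two opposing perspectives: an "
        "optimist and a skeptic. Pause and ask me which feels closer to "
        "reality before continuing. Based on my answer, suggest the next two "
        "questions I should explore."
    ),
}


def expand(message: str) -> str:
    """Expand a leading slash command into its full prompt plus the user's text."""
    command, _, rest = message.strip().partition(" ")
    template = PROMPTS.get(command)
    if template is None:
        # Unknown command (or no command at all): pass the message through unchanged.
        return message
    rest = rest.strip()
    # Append whatever followed the command as context for the template.
    return f"{template}\n\nContext: {rest}" if rest else template


print(expand("/pressure-test my idea to start the new project next week instead of two weeks from now"))
```

The design choice here is that the command carries the structure and the text after it carries the specifics, which is the same split you are asking your assistant to learn.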

Let’s walk through our examples.

Start by /pressure-testing new ideas

When you’re about to launch something — a feature, a campaign, a workflow — don’t ask, “Is this good?”

Ask your assistant to help you pressure-test it instead:

/pressure-test Restate my idea in your own words and list 3 assumptions you think I’m making. Then analyze it from two opposing perspectives — an optimist and a skeptic. Pause and ask me which feels closer to reality before continuing. Based on my answer, suggest the next two questions I should explore.

Why it helps: You surface blind spots before committing. The pause forces reflection instead of premature certainty.

Example: You could run this before presenting a roadmap update. The optimist’s view might emphasize opportunity size, while the skeptic’s view could surface adoption risks. Seeing both helps you refine your narrative before you ever hit “send.”

Upgrade how you ask with /question-types

Better questions yield better answers.

Use this prompt to experiment with how you frame them:

/question-types Generate five question styles (diagnostic, hypothetical, comparative, data-seeking, reflective) about [topic]. Give one example for each. Let me pick two styles to explore. For each chosen style, ask your question, wait for my answer, then help me refine it to push the conversation further.
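The `[topic]` placeholder is what makes this prompt reusable. Here is a minimal Python sketch of filling it in, assuming the template is stored as plain text. The helper name `fill` and the sample topic are hypothetical, chosen only for illustration.

```python
# Hypothetical helper: fill the [topic] slot in the /question-types template.
QUESTION_TYPES = (
    "Generate five question styles (diagnostic, hypothetical, comparative, "
    "data-seeking, reflective) about [topic]. Give one example for each. "
    "Let me pick two styles to explore. For each chosen style, ask your "
    "question, wait for my answer, then help me refine it to push the "
    "conversation further."
)


def fill(template: str, topic: str) -> str:
    """Replace the [topic] placeholder, refusing empty topics."""
    topic = topic.strip()
    if not topic:
        raise ValueError("topic must not be empty")
    return template.replace("[topic]", topic)


# Sample topic is made up for the example.
print(fill(QUESTION_TYPES, "our stalled onboarding redesign"))
```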

Why it helps: It builds your questioning muscle and makes your assistant a dialogue partner, not a search engine.

Example: You could run a question lab before a stakeholder review. Maybe you start with “What’s blocking progress?” and refine it into “Which blockers repeat across quarters — and what makes them persist?” That re-framing sharpens both your thinking and your meetings.

Use a /session-summary to turn insights into action

Knowledge fades without structure. End sessions by locking in what you learned:

/session-summary Summarize key takeaways in three bullets: (a) what I learned, (b) why it matters, (c) a next experiment to try. Then ask me to rate confidence (1–5) and explain my rating. Use that to suggest one follow-up action and one reflective journal question.

Why it helps: Reflection cements learning and connects each insight to a concrete next step.

Example: You could use this after a long strategy session. Instead of closing the tab, have your assistant outline what stood out, why it matters, and one experiment to try next week. The reflection loop makes progress visible and repeatable.

Pick one prompt and run it end-to-end today.

Version it. Note what worked. Adjust and repeat.

You’re not just training a chatbot — you’re training your future process.

Because the goal isn’t to prompt better.

It’s to think better — consistently, iteratively, and together.
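“Version it. Note what worked.” can be as lightweight as a list of numbered entries with a note attached to each. The sketch below is one possible shape in Python, not a prescribed tool: `PromptVersion`, `PromptLog`, and their fields are hypothetical names, and the notes are made-up samples rather than real results.

```python
from dataclasses import dataclass, field
from datetime import date


@dataclass
class PromptVersion:
    """One revision of a prompt plus a note on how it performed."""
    version: int
    text: str
    note: str = ""
    created: date = field(default_factory=date.today)


@dataclass
class PromptLog:
    """History of a single slash command, oldest revision first."""
    command: str
    versions: list[PromptVersion] = field(default_factory=list)

    def revise(self, text: str, note: str = "") -> PromptVersion:
        """Append a new revision; version numbers start at 1."""
        entry = PromptVersion(version=len(self.versions) + 1, text=text, note=note)
        self.versions.append(entry)
        return entry

    def latest(self) -> PromptVersion:
        """Return the most recent revision."""
        if not self.versions:
            raise LookupError(f"no versions recorded for {self.command}")
        return self.versions[-1]


log = PromptLog("/session-summary")
# Illustrative notes only, not real results.
log.revise("Summarize key takeaways in three bullets.", note="Too generic; no next step.")
log.revise(
    "Summarize key takeaways in three bullets: (a) what I learned, "
    "(b) why it matters, (c) a next experiment to try.",
    note="Better; the experiment bullet gives me something to do.",
)
print(log.latest().version, log.latest().note)
```

Keeping the note next to the text is the point: when a prompt stops working, you can see which change caused it.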

Prompt Summary

What’s the takeaway? Your AI assistant can be a really capable partner when you build it tools. The more consistent your process, the smarter your assistant becomes, and the more you realize the real learner in this partnership is you.

Links for Reading and Sharing

These are links that caught my 👀

1/ To future you - A poignant letter to a young person about AI. Cedric Chin manages to be clear-headed, somewhat optimistic, and pragmatic at the same time in this excellent essay. Read it, and you’ll be enlightened with his take on the place of AI in future tech, work, and life.

2/ What could possibly go wrong? - I enjoyed this thought piece on how to debug AI agents when exploring automated data visualization. Put simply, it’s complicated to make a product that displays unexpected information, and even more challenging when you introduce agentic workflows.

3/ Bright lights - Learn more about the lost art of neon signs - it’s not a stretch to say that this short piece is illuminating (yes, there’s a dad joke for any occasion).

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon
