How much AI *should* you add to your product?

You might be asking the wrong question when you ask how to plug in AI. Instead, ask: how can I make my product simpler and easier to use? Read: "Everything Starts Out Looking Like a Toy" #232

Hi, I’m Greg 👋! I write weekly product essays on topics including system “handshakes”, the expectations for workflow, and the jobs to be done for data. “What is Data Operations?” was the first post in the series.

This week’s toy: one of the more difficult CAPTCHA problems I’ve seen. You need to play Doom and kill 3 monsters to solve it. Edition 232 of this newsletter is here; it’s January 6, 2025.

If you have a comment or are interested in sponsoring, hit reply.

The Big Idea

A short long-form essay about data things

⚙️ How much AI *should* you add to your product?

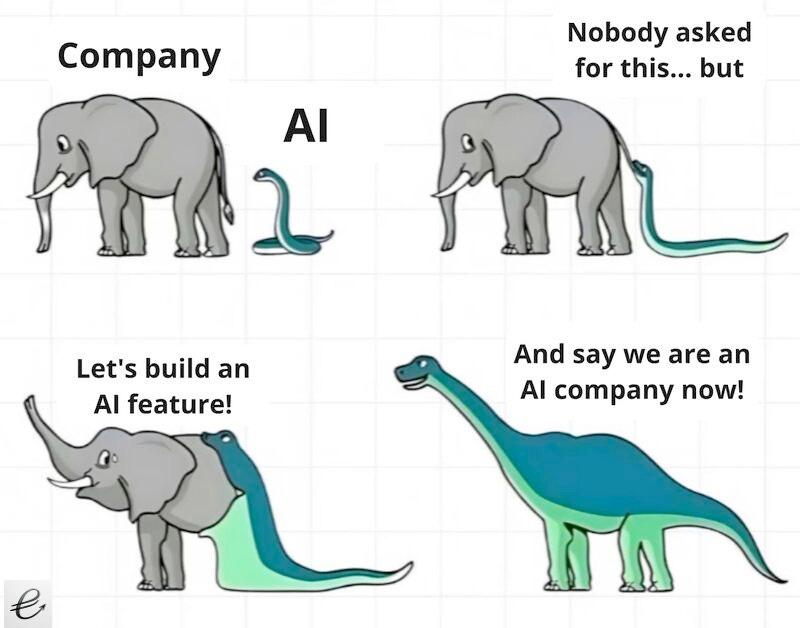

Open up your favorite app these days, and it feels a bit like the meme above. Use AI to improve your writing. AI can give you ideas to get started. AI will solve all of your problems!

AI feature creep is real, and it doesn’t feel organic. It feels like a technology in search of a problem. The “AI” part of the feature seems more important than actually meeting the need behind the feature.

What would it look like to take a more holistic approach?

Balancing paving cowpaths and building new roads

In a perfect world, AI features would enable new capabilities that were not possible before. These features would also remove unnecessary work and deliver both productivity gains and tangible benefits to the people using the software.

Because these outcomes are potentially so different from how we engage with software today, we lean on current metaphors. We’re not yet sure what the interfaces, triggers for actions, inputs, and outputs of the future should look like. The result? We stick with the “cowpaths” that are already well trodden.

At a certain point, that means moving away from existing software design while keeping the same design principles:

Simplicity: keep features as simple as possible, but no simpler

Functionality: do what’s promised and avoid anti-patterns

Beauty: make features pleasing to use and review

Consistency: each feature uses a familiar design language

When you consider adding AI features to existing software, how do you know where to focus? Start with making those systems open to change.

Pietro La Torre describes how to spot legacy (or “neo-legacy”) solutions this way:

High cost for simple changes

Complex and expensive integration with other systems

Struggle with data extraction and accessibility

Software built to take advantage of AI should be the inverse of those legacy systems:

Change needs to be fluid, constant, and inexpensive

Integration is built in and easy

Data models are defined and interoperable with other systems

One feature at a time…

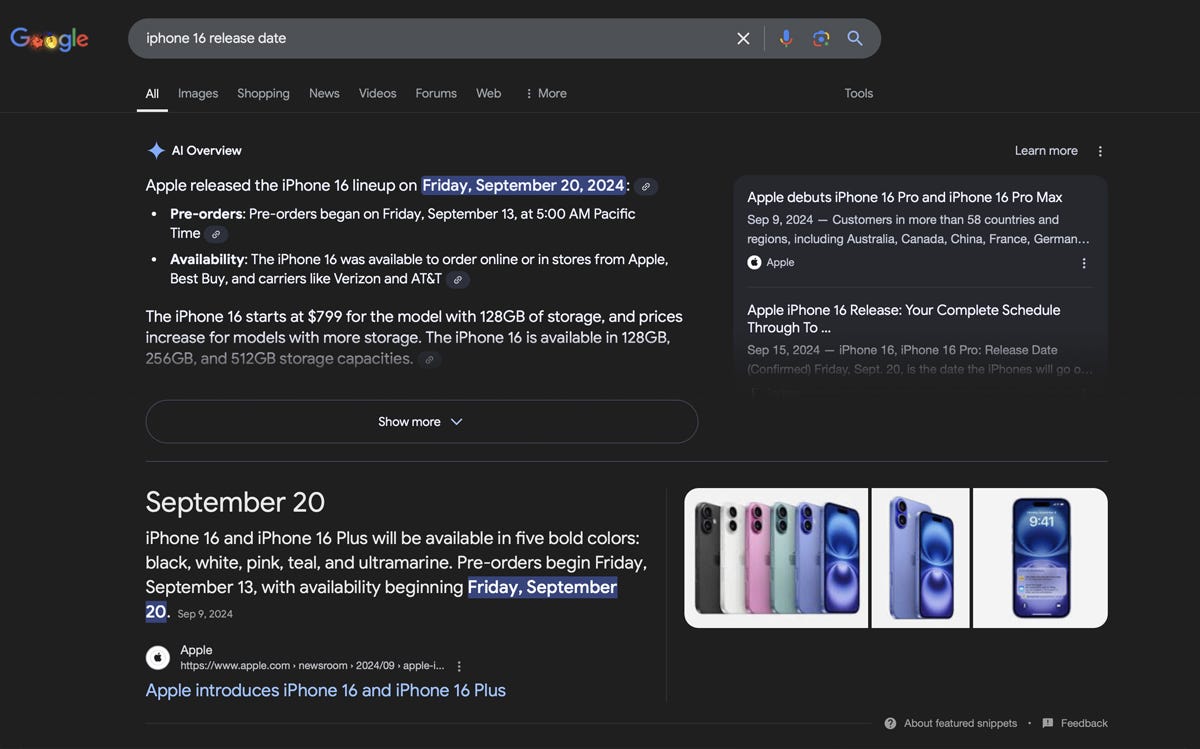

This is all pretty abstract, and it’s easier to consider with an example. Take Google’s search page, which is vastly different from what it was back in the days of a single search bar.

What do you see? I see a variety of results and not enough visual cues to know which results are “good”, “ad-supported”, “AI-driven”, or even which ones would work best for me.

Chuck Nelson compares OpenAI’s search to Google’s by demonstrating its simplicity: “The visual clutter is not there because it's a conversation, not a list. It's one answer instead of 10.” There are a lot of factors driving the way Google search looks today (and probably a lot of product teams behind it), and not many of them are focused on the customer.

The customer wants … to get a good answer when they search. Broadly speaking, they don’t care how it happens, as long as it’s a good result.

An AI-driven version of that search might be fine, but it needs to feel like the kind of search result you expect. In OpenAI’s version, the answer comes from a conversation with a bot instead of an exhaustive list of results. Notice that it doesn’t deliver a list of categories or open lots of windows with results; neither of those patterns matches what people expect when they “use a search engine”.

Making that experience better has to involve more substance than presenting the results as a pirate limerick (which OpenAI will happily do if you ask it to use that format).

A differentiated search experience powered by AI might give you additional context about:

why that link was picked for you

how you can learn more

whether this search result was customized for you or is similar to the result others would receive

Keep the customer’s goal in mind

That’s the message to remember for AI-driven features.

They work when they deliver benefits that align with what the customer wants to do. AI features for the sake of AI don’t make sense, because adding technology often adds clutter instead of delivering value.

In the OpenAI vs. Google search example, the customer wins … but only if the answers are also correct, reliable, expected, and understandable. When the technology (AI) simplifies the task and still provides a delightful experience, that’s the right amount of AI to add to your product.

Seeing a ✨ delivers a sparkle because it’s still relatively new. Unless features enhanced by AI also deliver better value and less complexity, that sparkle will fade once the novelty wears off.

What’s the takeaway? When you’re asking how much AI you should infuse into your product, the answer shouldn’t be a measure of AI. The right question: does it get the job done in an expected way for the customer and deliver delight?

Links for Reading and Sharing

These are links that caught my 👀

1/ Made for binge watching - Will Tavlin’s “Casual Viewing” essay on Netflix is sticking with me this week. It’s the best explanation I’ve read yet of the feeling of “500 channels and nothing’s on,” and it also makes the case that the abundance of content is the point. Like a fast food restaurant producing reasonable facsimiles of an expected burger or coffee drink, Netflix’s job is now to avoid offending rather than to inspire or awe.

2/ Socials must be losing users when… - At least one major platform (Meta) is inventing new main characters for us to “follow” and “interact with” and those characters are completely fictional. (h/t Dave Karpf)

3/ Ask LLMs to “make it better” - Can you get better results for a structured task simply by asking LLMs to “make it better”? Signs point to yes, although repeated asking has diminishing returns, and you need to know how to recognize “better”. (h/t to Joe Reis for this link)

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon