How to introduce AI agents into operational workflows

Adding AI agents to workflows? Start with simple, rule-based agents, then gradually introduce "thinking" agents with guardrails. Read: "Everything Starts Out Looking Like a Toy" #266

Hi, I’m Greg 👋! I write weekly product essays, including system “handshakes”, the expectations for workflow, and the jobs to be done for data. What is Data Operations? was the first post in the series.

This week’s toy: one of the best ways to explore code is to build something fun. For example, draw a character from the classic video game Space Invaders. Edition 266 of this newsletter is here - it’s Sept 1, 2025.

Thanks for reading! Let me know if there’s a topic you’d like me to cover.

The Big Idea

A short long-form essay about data things

⚙️ How to introduce AI agents into operational workflows

It's a familiar refrain at this point. You know you need to bring AI agents into your workflow. Without a doubt, agents are able to take action more reliably and more cheaply than humans, if you know what you're asking them to do. So the real question here is not if you want to introduce agents into the flow but how you want to achieve the goal.

Unlimited access to a process is almost always a bad thing, so it would be helpful to think about how to introduce agents with guardrails. Creating agents that act in controlled ways gives you a proof point to introduce the concept of an AI agent into a workflow as an actor. Then, you have a place to introduce a "thinking step" for the same agent while maintaining a limited set of actions that agent can take.

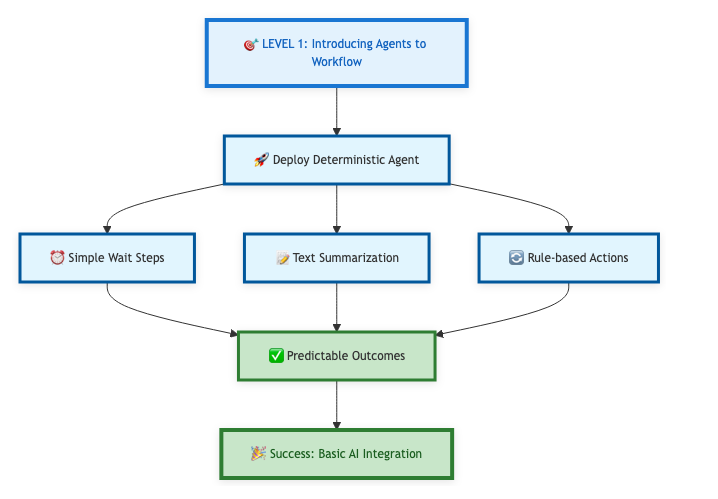

Here's the blueprint for adding agents. You start by defining a process and then identify the most likely step that doesn't need to be done by a person.

Deterministic agent vs. thinking agent

Let's qualify what we mean by "agents" in AI, as there are a lot of terms people might associate with this type of code. At the highest level, you can think of agents as falling into two broad buckets: deterministic and thinking.

Deterministic agents are precise tools. They're given a prompt, a narrow set of tools, and a defined stopping condition. When they finish, they announce that they're done and hand control back to the workflow.

Thinking agents are closer to junior colleagues. They still operate within guardrails, but they have more freedom to make decisions about how to accomplish a task. They may choose between multiple paths, apply judgment, or adapt to context. In a workflow, they also need to signal when they've finished a job or cannot continue.

Both types of agents are useful. Deterministic agents are similar to existing code because they output known values. Thinking agents might also produce known values, but they use an entirely different method to get there.

When you introduce a thinking element, you're expecting the system to be more creative. You're also expecting some non-standard output. The advantage of a deterministic agent is that you only get the type of output you expect.

Putting deterministic agents to work

Deterministic agents are a good choice to insert into an existing workflow that has clear rules and that you could execute with code. One example of this is using an agent to run a "wait" step. If you have a ticketing system where you want to delay escalation until a customer responds, a deterministic agent is good at a condition like ("wait 24 hours" OR "wait until... you get a response").

Looking at this, you might say ... "how is this different from a code step that waits 24 hours or a step that responds based on the arrival of another event in a queue?" The main difference here is that the agent framework lets you define a prompt to give you a deterministic outcome and lets you add conditions by changing a prompt.
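To make the wait step concrete, here's a minimal sketch of the rule the prompt would describe, written as plain Python. The names (`Ticket`, `wait_step`, the status strings) are hypothetical and not from any particular agent framework; the point is only that the step has a narrow job and a clear signal when it's done.

```python
from dataclasses import dataclass
from datetime import datetime, timedelta
from typing import Optional


@dataclass
class Ticket:
    # Hypothetical ticket shape, for illustration only.
    opened_at: datetime
    customer_replied_at: Optional[datetime] = None


def wait_step(ticket: Ticket, now: datetime,
              max_wait: timedelta = timedelta(hours=24)) -> str:
    """Finish when the customer replies OR the wait window expires."""
    if ticket.customer_replied_at is not None:
        return "customer_replied"   # hand control back: a reply arrived
    if now - ticket.opened_at >= max_wait:
        return "timed_out"          # hand control back: 24 hours passed
    return "still_waiting"          # nothing to do yet


opened = datetime(2025, 9, 1, 9, 0)
print(wait_step(Ticket(opened), opened + timedelta(hours=1)))
print(wait_step(Ticket(opened), opened + timedelta(hours=24)))
```

Changing the condition (say, 48 hours for one customer tier) is a one-line change here, which is roughly what editing the prompt buys you in an agent framework.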

Another example of a deterministic outcome is text summarization. A deterministic agent might take a long support conversation and output a three-sentence summary. It doesn't decide what to do with the summary; its job is to produce an outcome. The cost of failure here is pretty low, because the workflow can always continue.

If you're bored with the idea of a deterministic agent, don't miss the point. Inserting an agent as a peer in a workflow is a big step. It means you can do other steps in that workflow with an agent.

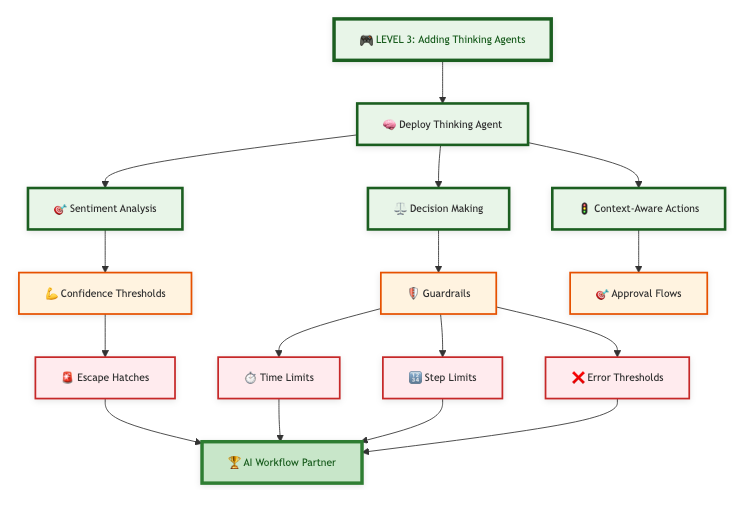

Considering thinking agents

Deterministic agents let you set a baseline for the ability to add agents to a workflow. But what you'd like to do is add an element of thinking to the agent action. "Thinking" is a bit of a misnomer because you're asking a pattern-matching process to match the most likely outcome to the prompt you've given.

Here the AI has more autonomy and also operates under clear boundaries. Consider a support workflow where tickets should escalate if the customer is frustrated. A thinking agent could run sentiment analysis, combine it with the account's SLA, and decide: if negative sentiment + overdue response, then escalate to Tier 2 automatically.

Unlike the deterministic "wait step," this decision isn't preprogrammed. The agent is exercising judgment within a narrow frame. It's not rewriting the playbook, but it is able to make a call between options.
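One way to picture the "narrow frame" is a sketch like the one below. Everything in it is an assumption for illustration: `propose_action` stands in for the agent's judgment (a real agent would reach its answer with a model, not an if-statement), and `ALLOWED_ACTIONS` is a hypothetical list owned by the workflow.

```python
from dataclasses import dataclass

# Hypothetical action names; the workflow, not the agent, owns this list.
ALLOWED_ACTIONS = {"escalate_to_tier_2", "keep_in_queue"}


@dataclass
class TicketContext:
    sentiment: str               # assumed classifier label, e.g. "negative"
    hours_since_response: float  # time since the team last replied
    sla_hours: float             # response window from the account's SLA


def propose_action(ctx: TicketContext) -> str:
    """Stand-in for the agent's call: negative sentiment + overdue => escalate."""
    overdue = ctx.hours_since_response > ctx.sla_hours
    if ctx.sentiment == "negative" and overdue:
        return "escalate_to_tier_2"
    return "keep_in_queue"


def bounded(action: str) -> str:
    """Guardrail: anything outside the allowed set goes to a person."""
    return action if action in ALLOWED_ACTIONS else "needs_human_review"


ctx = TicketContext(sentiment="negative", hours_since_response=30, sla_hours=24)
print(bounded(propose_action(ctx)))
```

The useful part is `bounded`: however the agent arrives at its choice, the workflow only accepts actions it already knows how to run.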

Workflows become more interesting when the "dumb wait step" can be improved by a classification or reasoning step. The thinking agent doesn't just move forward by doing work; it helps you make choices that would otherwise require a human operator.

Why simple steps matter

Adding a thinking step sounds like a great idea. It also has more variability than a deterministic step. This might be obvious to you, but it's important to keep in mind for limiting risk when you get started.

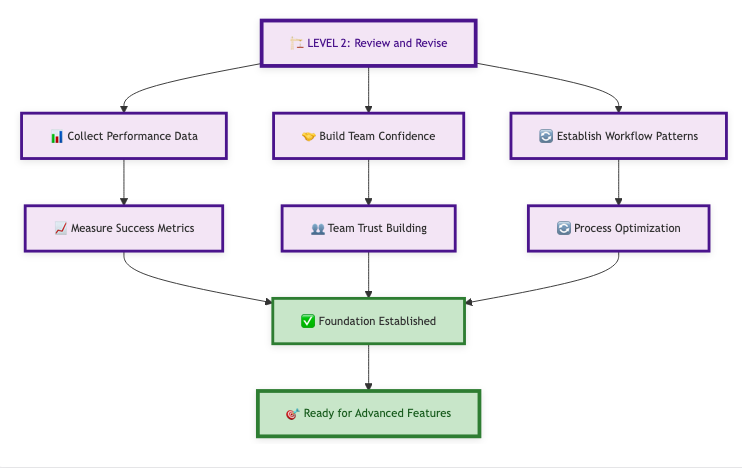

Once you have an agent contributing in workflows, teams begin to trust the output, and you start the flywheel of "how can agents contribute?" Successful outcomes drive confidence that you could give agents additional workflow steps.

It makes no sense to begin by having an all-agent workflow. But once you have basic tasks, evidence of success, and the opportunity to increase responsibility, you've got a repeatable pattern for success.

Introducing thinking steps

A "thinking step" means the agent can suggest or take bounded actions where the risk is manageable.

You can build guardrails around these steps with thresholds, confidence levels, or approval flows.

For example:

If the AI is more than 90% confident in sentiment analysis, escalate automatically.

If confidence falls below that threshold, flag for human review.

This way, the AI contributes real decision-making power, but never exceeds the boundaries you set.
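The threshold rule above can be sketched in a few lines. The function name and return values are hypothetical, and I'm assuming the confidence arrives as a number between 0 and 1. The text doesn't say what happens at exactly 90%, so this sketch sends that case to a human.

```python
def route_by_confidence(confidence: float, threshold: float = 0.90) -> str:
    """Escalate automatically only when confidence is above the threshold."""
    if not 0.0 <= confidence <= 1.0:
        raise ValueError("confidence must be between 0 and 1")
    if confidence > threshold:
        return "escalate_automatically"
    # Assumption: at or below the threshold, a person takes a look.
    return "flag_for_human_review"


print(route_by_confidence(0.95))
print(route_by_confidence(0.72))
```

Keeping the threshold as a parameter makes it easy to start strict and loosen it as the team builds trust in the output.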

Handling incomplete work

Letting agents make decisions raises an obvious question: how do you help them when they can't finish a job? Without a stopping condition or some other bound, they might loop. You need to give them a limit, such as "take no more than x seconds to finish the work," "try 4 times," or "take your best guess and then hand it off."

Agents live in a probabilistic world. They might spin in circles, run out of context, or hit an unexpected edge case.

That's why it's critical to design escape hatches. Agents should have clear rules for when to stop and report back:

Time limits (stop after 2 minutes).

Step limits (stop after 10 actions).

Error thresholds (if three consecutive errors occur, exit).

When an agent hits these guardrails, it should fail gracefully. That looks like alerting the system or a human that it couldn't complete the work and handing the task back. This keeps workflows resilient and prevents runaway behavior.
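Here's a rough sketch of those three escape hatches wrapped around an agent loop. It assumes a hypothetical `step` callable that returns `True` when the job is finished and raises an exception on error; the status strings are made up for this example. Note that the time limit is only checked between steps, so a single long-running step would need its own timeout.

```python
import time
from typing import Callable


def run_with_limits(step: Callable[[], bool],
                    max_seconds: float = 120.0,
                    max_steps: int = 10,
                    max_consecutive_errors: int = 3,
                    clock: Callable[[], float] = time.monotonic) -> str:
    """Run `step` until it reports done or a guardrail trips."""
    start = clock()
    consecutive_errors = 0
    for _ in range(max_steps):                 # step limit
        if clock() - start >= max_seconds:     # time limit (checked between steps)
            return "handed_back:time_limit"
        try:
            if step():
                return "done"                  # the agent declares victory
            consecutive_errors = 0             # a clean step resets the error count
        except Exception:
            consecutive_errors += 1
            if consecutive_errors >= max_consecutive_errors:
                return "handed_back:error_threshold"
    return "handed_back:step_limit"


print(run_with_limits(lambda: False))  # never finishes, so the step limit trips
```

Every exit path returns a status the workflow can act on, which is the "report back" half of failing gracefully.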

Building the path forward

Introducing AI agents into workflows doesn't have to be a leap of faith. Start small with deterministic steps that everyone understands, then gradually expand into thinking steps that act within boundaries. Along the way, make sure agents know how to declare victory—and how to admit defeat when they hit a wall.

That's how you build trust, layer by layer, until AI becomes a reliable partner in the flow of work. The only question left is: where in your workflow will you give your first agent a seat at the table?

What's the takeaway? Start with simple, rule-based AI agents in workflows, then gradually introduce "thinking" agents with guardrails. Build trust incrementally to avoid disrupting existing processes.

Links for Reading and Sharing

These are links that caught my 👀

1/ Ratings can be gamed - this might be a SPOILER for some people. Lists where people rank things (like movies) can be manipulated, and Rotten Tomatoes is the most recent candidate. The median movie review used to be a lot more spread out before the site was owned by Fandango. Now the scale is compressed.

2/ When AI tokens get cheaper - in retrospect, it’s so clear. The tokens used for GPT-2 or GPT-3 are so much cheaper. Why don’t people use them now? Because there is a new model in town. Enter Jevons Paradox → the tokens are cheaper, but usage soars. The economics of token usage are complicated.

3/ “Check the data warehouse” - when you need to query data, do you use a replica of production or the data warehouse? It’s not always easy to decide, shares Emilie Schario. Knowing the canonical definition of a metric is easier in a warehouse, and sometimes you need to look at the actual operational data to see what’s going on.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon