Why does writing to a Chatbot seem familiar?

We must all be waiting for new answers to our questions, because everyone's asking a bot. It turns out we're already used to texting computers. Read "Everything Starts Out Looking Like a Toy" #145

A HUGE thank you to our newsletter sponsor for your support.

If you're reading this but haven't subscribed, join our community of curious GTM and product leaders. If you’d like to sponsor the newsletter, reply to this email.

Brought to you by Pocus, a Revenue Data Platform built for go-to-market teams to analyze, visualize, and action data about their prospects and customers without needing engineers. Pocus helps companies like Miro, Webflow, Loom, and Superhuman save 10+ hours/week digging through data to surface millions in new revenue opportunities.

Hi, I’m Greg 👋! I write weekly product essays, including system “handshakes”, the expectations for workflow, and the jobs to be done for data. What is Data Operations? A discussion that grew into Data & Ops, a fractional product team.

This week’s toy: That shuffle button in your apps? You’re not sure exactly how it works? Here’s one engineer explaining why Spotify shuffle is not truly random. I’ve often wondered how it’s possible to get the same queue item played again when you’re playing shuffle and going back and forth in the queue. This is a good example of creating some element of novelty, without too much randomness. Edition 145 of this newsletter is here - it’s May 15, 2023.

The Big Idea

A short long-form essay about data things

⚙️ “I’m sorry Dave, I can’t do that”: Why talking to a Chatbot sounds familiar

If you remember HAL 9000 from the movie “2001: A Space Odyssey”, you’re a film buff who likes watching Stanley Kubrick movies, or you’re now old and need to update your film references. For the people who have never seen this movie, one of the key plot points is a computer that takes voice instructions and then decides to stop listening to the humans (hijinks ensue). The point to take away is that it seems quite natural to delegate certain activities to computers, especially when they involve a lot of computation and understanding in a short period of time.

We make the same reasonable assumption today when we talk or chat with computers. When they seem too familiar, they fall into the “uncanny valley” as our brains get momentarily confused between talking to a person and talking to a machine. But it’s also a really efficient way of getting stuff done.

Our queries are already text-based

In the last three years, many more of our interactions have moved to a text-based interface. It takes less time to get started, can be done asynchronously, and allows you to connect with many more people than you could reach otherwise.

These inquiries start with a chat or a text. Quick question? Have a minute? You might not like the way we’ve moved from an in-person meeting to a video call to a document to an email or a text, but we’re there.

Perhaps you solved your last problem with a series of rapid questions over Slack, text, or Teams because you’re used to a back-and-forth method of problem-solving. The problem with this mode of interaction? It’s really distracting to other people when they are engaged in deep work - research suggests it might take more than 20 minutes to reset and return to the problem.

That means when you ask someone to help you, it’s a lot more effective if you’ve already thought of a high-value question to ask them. If you don’t know how to focus on that item, how do you get started? One way is to use ChatGPT or another chatbot to reflect back on your question.

Chatbots don’t get tired of answering your dumb questions

When you ask ChatGPT a question, it answers almost immediately. (The first time, it sort of feels like magic, until you recognize the cadence and style of the response.) You can ask your question over and over again and iterate on it until the answer makes sense.

ChatGPT is a tutor that never gets tired of hearing your question. That’s one reason innovators like Khan Academy are incorporating chatbots into their core product. Let’s not forget that there are clear economic benefits to outsourcing an answer to a computer, and the computer answers correctly much of the time when asked a templated question. So why should you be cautious when you ask your dumb question?

ChatGPT – and other chatbots built on LLMs (large language models) – are not infallible. They return the most likely answer based on the words (tokens) you have provided, in the order and structure that you provided them, according to the model they’ve been trained on.

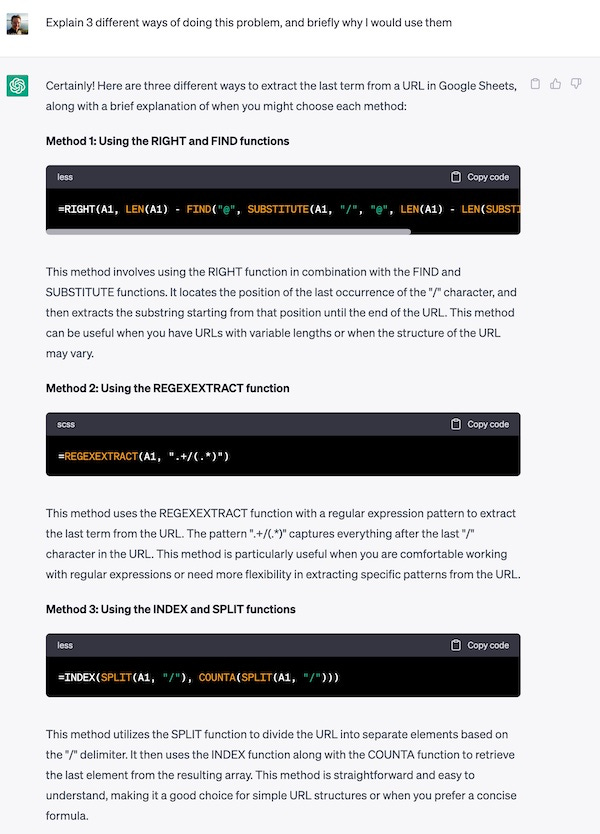

Let’s take a simple example of writing a formula in Google Sheets to get the last term from a URL, or extracting the name of the URL from the whole thing.

ChatGPT does a great job at this pretty specific task. If you ask it a more general question like “How do you write google sheets formulas to get different parts of a URL”, you get results and strategies that work but use very different functions.

As a spreadsheet writer, how would you know whether to use INDEX, SPLIT, REGEXEXTRACT, MID, LEFT, SEARCH, ISERROR, LEN, or many other functions to solve your issue? (There are many more solutions to this kind of problem, too.) If you’re new at doing this, you would have absolutely no idea how to solve the problem.
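To make the task concrete, here is a minimal sketch of the same job in Python rather than Google Sheets. It is not the formula ChatGPT produced; the function names `last_path_segment` and `host_name` are made up for illustration, and the example URLs are placeholders.

```python
from urllib.parse import urlparse


def last_path_segment(url: str) -> str:
    """Return the last non-empty piece of the URL path, or "" if there is none."""
    path = urlparse(url).path
    # Drop empty pieces so a trailing slash does not produce an empty result.
    segments = [part for part in path.split("/") if part]
    return segments[-1] if segments else ""


def host_name(url: str) -> str:
    """Return the host part of the URL, without scheme, port, or path."""
    return urlparse(url).hostname or ""


if __name__ == "__main__":
    print(last_path_segment("https://example.com/blog/posts/hello-world/"))
    print(host_name("https://example.com/blog/posts/hello-world/"))
```

Even in this small version there are choices to make (what to do with a trailing slash, a query string, or a URL with no path at all), which is the same kind of decision you face when picking between spreadsheet functions.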

Perhaps you would start by reading the function documentation as provided by Google. You could search for an answer that sort of matches the task you are looking to solve. You could ask a friend. And you could keep working on your problem until you make it work. Or you could iteratively solve the problem.

Chatbots don’t check your work

One of the big advantages of asking a bot like ChatGPT about a problem is that you can go beyond the first level of the solution to understand better what is happening. It doesn’t care whether you start with the solution, ask it to explain why, or start from a more general-purpose task and then talk about specific ways to solve the problem.

The superpower of any chatbot is the ability to ask it “explain why this happens.”

For the example we discussed above, here are a few more ways to accomplish the same thing and some reasons why you might want to use another method.

Caution: you still don’t know whether these approaches work.

But you do have several things that you can try and test to get closer to the solution, and you haven’t asked someone for advice yet. This is especially important when you are debugging a technical question or if you don’t know how to start with a problem.
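One way to "try and test" is to run each suggested approach against a few inputs where you already know the right answer. The sketch below does that in Python for the URL task; both candidate functions and the test URLs are hypothetical stand-ins for whatever the chatbot suggests, not its actual output.

```python
import re


def candidate_split(url: str) -> str:
    # Approach 1: split on "/" and take the last piece.
    return url.split("/")[-1]


def candidate_regex(url: str) -> str:
    # Approach 2: capture the last run of non-slash characters,
    # allowing for one optional trailing slash.
    match = re.search(r"([^/]+)/?$", url)
    return match.group(1) if match else ""


# Inputs paired with the answer you expect to get back.
CASES = {
    "https://example.com/blog/hello-world": "hello-world",
    "https://example.com/blog/hello-world/": "hello-world",
}


def failures(candidate) -> list:
    """Return the URLs for which the candidate gives the wrong answer."""
    return [url for url, expected in CASES.items() if candidate(url) != expected]


if __name__ == "__main__":
    for candidate in (candidate_split, candidate_regex):
        print(candidate.__name__, failures(candidate))
```

Here the split approach looks fine on the first URL but returns an empty string when the URL ends in a slash, which is exactly the kind of gap a quick check catches before you rely on the answer.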

If the problem is generally straightforward, ChatGPT will keep explaining to you until you get down to the first principles of the problem and walk you through how to get to the answer it provided. You need to ask it to explain, though.

Now you’re ready to talk to someone

Once you have an idea to solve the problem and you’ve tested your answer, you might not need to ask someone else. But it’s probably a good idea to test your assumptions, especially when it’s the first time you’ve tried to solve this problem.

I’m not currently worried about whether GPT will refuse to open the pod bay doors. I am worried about people using the information it spits out without reviewing it or testing their intuition. You might not know if a technical answer is right, but a quick check with a search engine will probably save you some arguments when the AI hallucinates.

Using chatbots for good

So as a person who needs to use chatbots (that would be all of us), what are some of the strategies that you need to use to get better answers? The first thing to do when you have a question is to ask ChatGPT to break it down into independent steps. Then ask domain experts about the parts where you don’t have a full understanding of what’s happening.

You can also use this method to become a domain expert on lots of well-known subjects by asking ChatGPT to be your tutor. Once you have a repeatable test, ask about another part of the problem.

ChatGPT is also good at helping you understand your unknown unknowns. If you ask it to tell you what you haven’t thought about and to imagine some of the things that might go wrong, it can provide valuable perspective.

Why does it feel familiar to talk to GPT? It’s almost like talking to a friend that you don’t know yet, who’s smarter than anyone. Where it starts to feel weird is when you realize that it doesn’t have context for your conversation and it’s just being “helpful” but the definition of helpful is matching a model, not necessarily giving you the answer you think you need.

What’s the takeaway? Chatbots and AI-supported conversation are here to stay. We need to get used to the idea of talking to computers while remembering that they are programmed to answer us in particular ways. We can use that to our advantage by asking them to explain unfamiliar concepts until we grok them better, but it’s our job to verify that the statements are right.

Links for Reading and Sharing

These are links that caught my 👀

1/ Explaining statistics is an art - Olga Berezofsky does a great job explaining how to do linear regression and correlation analysis. This is an article I’ll bookmark for the next time I need to think about the right questions to ask when thinking about statistical analysis.

2/ Everything built on the data warehouse - If all of your data is already in your data warehouse, why move it to another place to transform it? That’s the idea posed by Barr Moses on “zero etl”, another way of saying “keep your logic in the warehouse.” The advantage? Data sharing through Snowflake makes it much easier to ingest data from other systems, and dbt is a solid way to show your ETL work (or more like ELT in this case) while documenting what you do for other tools. Will it become the dominant way to build apps? Ask again later.

3/ AI needs to show its work - Maneesh Agrawala gives a concise and vivid explanation for why AI observability is one of the most important topics of our time. Without knowing why a machine makes its decisions, it’s awfully hard to make it check its work as I advocated this week in my essay.

What to do next

Hit reply if you’ve got links to share, data stories, or want to say hello.

Want to book a discovery call to talk about how we can work together?

The next big thing always starts out being dismissed as a “toy.” - Chris Dixon